Degrading Behavior: Graceful Integration

There has been a lot of talk about graceful degradation. In the end, much of it amounts to lip service. People often talk a good talk, but when the site hits the web, let’s just say it isn’t too pretty.

Engineers and designers work together, or apart as the case may be, to create an experience that users with all of their faculties and a modern browser can enjoy. Meanwhile, the rest of the world is left out in the cold.

The design starts with the best of intentions, and then the interactivity bug takes hold. What comes out is something that is almost usable when slightly degraded, but totally non-functional when degraded to the minimum.

In the end, of course, users’ needs must be considered. A graphically intensive site doesn’t hurt if you are a design firm, but it might not be the best option for a company serving medical customers.

My suggestion, however, is simple. Instead of degrading your site as users’ needs become greater, start with a site that is usable at the lowest common denominator and work up.

Understand who your audience is and prepare to serve those who have the greatest needs. Build a site that is attractive and works even if the user can only experience it through a program that reads it to them.

Once your site has been built and is fully functional, build up. Integrate functions to serve the users that have some needs, but aren’t in the highest-need area on the spectrum.

Continue on this path until you have integrated all of the latest and greatest, bleeding-edge functions that your highest-ability users can interact with.

As you build this way, be sure that you are simply enhancing the function that is already on the screen. As you add enhancements, you should be able to remove them cleanly and still get the same experience you started with.

I call this approach graceful integration. At each progressive step, you integrate more functionality and interaction while preserving the layer just below as a separate, complete user experience.

Each progressive enhancement should be separate and easily disabled. The granularity of your enhancements can be as coarse as a two-step approach: all or nothing. You may also enhance your site carefully enough to allow several levels of degradation, depending on your users and their distinct needs.
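To make “separate and easily disabled” concrete, here is a minimal sketch that treats each enhancement as an independent unit with a level, a feature test and an `apply` step. The names `enhancements`, `applyEnhancements` and the `page`/`env` objects are hypothetical; in a real browser, `apply` would modify the DOM rather than record its name.

```javascript
// Hypothetical enhancement registry. Each layer declares the level it belongs
// to, a feature test and an apply() step that builds on the plain HTML page.
const enhancements = [
  {
    name: "styled-tabs",
    level: 1,
    isSupported: () => true,
    apply: (page) => page.active.push("styled-tabs"),
  },
  {
    name: "ajax-search",
    level: 2,
    isSupported: (env) => env.hasXmlHttpRequest,
    apply: (page) => page.active.push("ajax-search"),
  },
];

// Apply every supported enhancement whose level is at or below maxLevel.
// maxLevel = 0, or deleting an entry from the list, leaves the base page as-is.
function applyEnhancements(list, env, maxLevel, page) {
  for (const enhancement of list) {
    if (enhancement.level > maxLevel) continue; // layer switched off for this user
    if (!enhancement.isSupported(env)) continue; // browser lacks the feature
    enhancement.apply(page);
  }
  return page;
}
```

For example, `applyEnhancements(enhancements, { hasXmlHttpRequest: false }, 2, { active: [] })` applies only the tab styling, because the AJAX layer's feature test fails. The all-or-nothing approach is simply the case where every enhancement shares one level.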

A natural separation of user interaction happens when HTML is written, CSS is added and Javascript acts on the resulting design. With this three-tier separation, you can use work that will be done regardless to define your user interaction in a clean way.

One of the best quotes I’ve heard regarding graceful degradation, paraphrased, is: “If you show it with Javascript, hide it with Javascript.” I offer this without a source, as I can’t recall where I heard it. If you know (or are) the author, stand up and reap the rewards.

The attitude in this quote represents the core of graceful integration. As you pile the Javascript on, be sure you manage its behavior with Javascript. Don’t use CSS to manage something that is going to be handled with Javascript. If you must, prepare a style sheet that defines scripted behavior, but set the object classes with Javascript.
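A minimal sketch of setting classes from script, assuming a hypothetical scripted-behavior style sheet with rules scoped under a `js` class. The class names `js` and `is-collapsed` and both function names are illustrative, not from any library. Because the class only appears when the script runs, the scripted styles can never hide content from users without Javascript.

```javascript
// Call this from a script in the page, e.g.
//   markScriptingAvailable(document.documentElement);
// Scripted-behavior CSS can then be scoped under the class, for example:
//   .js .details-panel.is-collapsed { display: none; }
function markScriptingAvailable(root) {
  if (root && root.classList) {
    root.classList.add("js");
  }
  return root;
}

// "If you show it with Javascript, hide it with Javascript": the panel is
// only ever collapsed by toggling a class from script, never by base CSS.
// Returns true when the class is now present (panel collapsed).
function togglePanel(panel) {
  return panel.classList.toggle("is-collapsed");
}
```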

There are other benefits you’ll gain from this approach. Not only will your users appreciate the time and care you put into their experience, so will the search engines.

As search spiders crawl your site, they won’t see any CSS that appears to be doing something sneaky, like hiding text from the viewer. Spiders have gotten smarter, and they recognize when something fishy is going on. Since you are already doing something good for your users, you get this bonus for free.

For those of you looking for snippets of code, the best thing you could know and rely on is the “noscript” tag. This tag defines what the page shows to users without Javascript: its contents are rendered only when scripting is disabled or unsupported. I use this tag quite a bit to display extra form controls when Javascript has been disabled or is unavailable to the user. You can use it like the following:

```html
<script type="text/javascript">
  // your script logic goes here
</script>
<noscript>
  <!-- HTML elements go here, like style definitions or form controls. -->
</noscript>
```

You can also scatter noscript tags throughout the page to display elements that might otherwise be missing for some of your users.

In the end, behavior on your site should be defined by the HTML first, the CSS second and the Javascript third. Should you choose to go to a finer granularity, don’t forget to double-check that your users can still use every layer effectively. Be mindful of your users, integrate function into your site in stages and make the web a better place.

Website Overhaul 12-Step Program

Suppose you’ve been tasked with overhauling your company website. This task has been a source of dread and panic for creative and engineering teams the world over.

Some people, in the panic and shuffle, opt for the fast-and-loose approach. They start throwing anything they can at the site, hoping something will stick. Anything means ANYTHING. All marketing ideas go in the bucket, then the executive mandates, the creative odds and ends, some engineering goodness and all of that. This almost always results in a disaster.

Others look to collect everything that people want and need, do a ton of marketing research and then follow that up with user testing. Though this may lead to a usable site, this method probably won’t generate a site that actually solves user needs.

There are various other permutations on these ideas, including the design by committee, the hire a specialist and the design by marketing requirements approaches.

After suffering through most of these approaches, I devised a plan that is reasonable, easy to execute and answers common questions. Executed well, it will provide a smart, well-designed, user-oriented site that also fulfills business needs.

I call my plan the Website Overhaul 12-Step Program. Let’s dive in.

Step 1: Install Analytics and Do Nothing for Three Months.

This doesn’t mean you should stop making standard information updates or handling common, day-to-day operations. I simply mean: don’t design. Don’t map workflows. Don’t create nav structures and hierarchy and all of that. Just sit on your hands and wait. For. Three. Months.

Step 2: Review Analytics Data and Collect Client Needs.

Once you have let the analytics bake for three months, review your results. Sometimes they back up what your marketing team is pushing for. More often, there are little surprises and gems you’d never anticipate.

Look for commonly viewed pages. Review common search terms. Watch for people seeking names, phone numbers, account data or anything else that might seem odd in a search. All of these items can give you insight into your user.
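If your analytics tool lets you export raw site-search terms, a quick tally makes the common ones stand out. This is a minimal sketch assuming the export is a plain array of strings; `topSearchTerms` is a hypothetical helper, and lower-casing is a choice that suits search terms (it would not suit case-sensitive page paths).

```javascript
// Count how often each search term appears and return the n most common
// as [term, count] pairs, most frequent first (ties sorted alphabetically).
function topSearchTerms(searches, n) {
  const counts = new Map();
  for (const raw of searches) {
    const term = String(raw).trim().toLowerCase(); // "Phone Number " == "phone number"
    if (term === "") continue; // skip empty searches
    counts.set(term, (counts.get(term) || 0) + 1);
  }
  return [...counts.entries()]
    .sort((a, b) => b[1] - a[1] || a[0].localeCompare(b[0]))
    .slice(0, n);
}
```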

While reviewing your analytics data, collect stakeholder ideas, information and requirements, and factor them into your plans moving forward. Be sure to balance your findings, tempering stakeholder input with what your analytics data shows.

Step 3: Create an Information Hierarchy.

I don’t care what you think your navigation should be; think about the information you have and create a hierarchy of relations. Which documents are gateways to information? Which information is subordinate to other information? The answers are all useful in developing an information hierarchy for your site.
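An information hierarchy is, at heart, a tree. This minimal sketch uses hypothetical page titles to show how gateway documents (nodes with children) and the path to any document fall out of that structure; `gateways` and `pathTo` are illustrative names only.

```javascript
// Hypothetical hierarchy: each node is a document, and its children are
// the documents subordinate to it.
const hierarchy = {
  title: "Home",
  children: [
    {
      title: "Members",
      children: [
        { title: "Claims", children: [] },
        { title: "ID Cards", children: [] },
      ],
    },
    { title: "Providers", children: [{ title: "Find a Pharmacy", children: [] }] },
    { title: "Contact Us", children: [] },
  ],
};

// Gateway documents are the ones that lead to other information.
function gateways(node) {
  if (node.children.length === 0) return [];
  return [node.title, ...node.children.flatMap(gateways)];
}

// Titles from the top of the hierarchy down to a document, or null if absent.
function pathTo(node, title) {
  if (node.title === title) return [node.title];
  for (const child of node.children) {
    const rest = pathTo(child, title);
    if (rest) return [node.title, ...rest];
  }
  return null;
}
```

Here `gateways(hierarchy)` yields `["Home", "Members", "Providers"]`, and `pathTo(hierarchy, "Find a Pharmacy")` yields `["Home", "Providers", "Find a Pharmacy"]`, which is also a ready-made breadcrumb.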

Step 4: Develop Core Navigation.

Now that you have your information hierarchy and months’ worth of synthesized analytics data, you can start developing your core navigation. Think about what your users need and what your marketing team wants to accomplish. Apply everything you know here. Think about taxonomy and make sure you have navigation consistency, both in vocabulary and in behavior. This is your first move in actually creating a site.

During your core navigation development, you should start using tools like card sorts and thesauri to solve copy concerns and ensure clear language and categorization. If need be, you can reassess your information hierarchy at this step.

Step 5: Uncover Special Needs Navigation.

This does not mean you need to start thinking about accessibility right now (though accessibility is VERY IMPORTANT). It means you need to consider items that don’t fit in your core navigation structure but answer questions your users have. Assess your users’ special, non-core needs and address them here. Make a list and associate the special needs navigation with specific pages.

Step 6: Wireframes.

Now that you have your navigation structure laid out and your special needs listed, you are ready to start creating a visual guide for the layout of the site. Consider your user, what they look for most, what they look for least and consider Fitts’s Law. Think about scanning behaviors. Build something that begs to be clicked on, even when it’s printed and bound.

Standard (and not-so-standard) user testing should happen here. Be sure to develop your wireframes with the correct granularity for your audience to ensure they don’t get stuck in the mud of details that are unimportant at this stage.

Step 7: Design.

Once wireframes have been created and approved, pass the site along to the design team for their magic touch. Let them bump, nudge and finesse your wireframes into a compelling presentation. If you work carefully with your design team, they can take genius and bring it to life. Let them.

Step 8: Develop Templates.

Volumes could be written on the topic of templates and content management systems, including my notes on Object Oriented Content. For now, let’s simplify the idea and say, templates are good. You should use them. They will make your life easier in the short and long run. Now is the time to build them. Include all of the design goodies from step 7.
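To show why separating templates from content pays off, here is a minimal sketch of a template with `{{name}}` placeholders filled from a content object. The placeholder syntax and `renderTemplate` are assumptions for illustration, not any particular CMS's API; values are HTML-escaped here, which assumes plain-text content (rich HTML content would need a different rule).

```javascript
// Escape characters that would otherwise be read as markup.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Replace each {{name}} placeholder with the matching content value.
// Placeholders with no matching content render as an empty string.
function renderTemplate(template, content) {
  return template.replace(/\{\{\s*(\w+)\s*\}\}/g, (match, key) =>
    Object.prototype.hasOwnProperty.call(content, key) ? escapeHtml(content[key]) : ""
  );
}

// One template, reused for every page of this type.
const pageTemplate = '<article><h1>{{title}}</h1><div class="body">{{body}}</div></article>';
```

The same `pageTemplate` can render any number of pages; changing the design means editing one template rather than every page.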

Step 9: Implement Site Structure.

Once your templates are built, using all of the designs produced from your previous work, you are ready to start implementing your site structure. If you created solid templates, this step should be fairly simple and straightforward. Take advantage of the separation between your templates and your content. All your content should drop in and you can make tweaks to your presentation. You’re getting close.

Step 10: Test and Bug-Fix.

Once you have implemented the site structure, begin testing the site. Send it through QA. Look for broken links. Look for bad content. Find items that will be deal-breakers for your users and repair them now. Ultimately, you don’t want your users to find the flaws you already thought of. Be aware and move forward in a smart way. By now, you should already have stakeholder buy-in, so stakeholders should not still be in the mix unless they are core to user testing.
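Checking internal links is easy to automate once you have a list of pages and the links on each. This minimal sketch assumes a hypothetical `pages` map from each site path to the hrefs found on that page (gathered by a crawler or your CMS). It only checks internal paths; external links would need a real HTTP request.

```javascript
// pages: { "/path": ["/other", "https://example.com", ...], ... }
// Reports every internal link whose target path is not a known page.
function findBrokenLinks(pages) {
  const known = new Set(Object.keys(pages));
  const broken = [];
  for (const [page, links] of Object.entries(pages)) {
    for (const href of links) {
      // Only site-relative paths; skip external and protocol-relative links.
      if (!href.startsWith("/") || href.startsWith("//")) continue;
      const path = href.split(/[?#]/)[0]; // ignore query strings and fragments
      if (!known.has(path)) broken.push({ page, href });
    }
  }
  return broken;
}
```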

Step 11: Deploy.

Once you have the final seal of approval from your testers, you should feel confident your site is ready for prime time. Launch it and let your users have a crack at it. Give yourself a pat on the back: your site is live.

I’m sure you’re wondering why everything launched at step 11, since this is, after all, a 12-step program. Well…

Step 12: Watch Analytics for New Trends.

Now that your site is live, watch for new trends in your analytics data. Are your users getting their questions answered or are they still having difficulties? Are your users visiting new pages? Is everything still working or are you experiencing technical issues? All of this will appear in your analytics data, so keep an eye out.

There are many small steps that fall within the scope of this 12-step process, but it is up to you to ensure you implement your specific needs and tests. If your stakeholders need you to keep them in the loop, make sure you let them know your process so you can get their input when it is going to be most useful.

Make sure the people involved in the process feel confident about your moves and get buy-in at the right times to facilitate a swift, fluid move through the process. If you move forward with confidence, you will be a hero to your coworkers and a champion of your users. Take control, build confidence and make the web a better place.

Pretend That They're Users

Working closely with the Creative team, as I do, I have the unique opportunity to consider user experience through the life of the project. More than many engineers, I work directly with the user. Developing wireframes, considering information architecture and user experience development all fall within my purview.

Typical engineers, on the other hand, live in a world separated and buffered from Creative and, subsequently, the user. They work with project managers, engineering supervisors and other layers of businesspeople that speak on behalf of the user, alienating the engineer from the world they affect.

It becomes easy to dissociate, refer to users as clients and eventually abstract the interaction by focusing on the system and the software client that will interact with the functions being built. People as users become a vague notion that is hardly considered as functional pieces are built and pushed out.

This is an unfortunate circumstance as engineers exist solely for the purpose of building and supporting systems that people, somewhere, will be interacting with. People use computers. People use programs. People use web sites.

Even when working with Creative people, I still find myself getting lost in the code and forgetting that I eventually need to be able to interact with the user. I work on items that stimulate my brain, but don’t actually solve the user needs.

A popular design pattern on the web is MVC (Model-View-Controller). I prefer this pattern myself. There are pitfalls in any pattern, but MVC allows me to work on three separate pieces (data, presentation and control logic) in parallel.
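For readers who haven't worked with MVC, this minimal sketch shows the three pieces and how they stay separate. It is a generic illustration with hypothetical names (`ArticleModel`, `articleListView`, `ArticleController`), not how CakePHP structures things.

```javascript
// Model: owns the data and its rules; knows nothing about presentation.
class ArticleModel {
  constructor() {
    this.articles = [];
  }
  add(title) {
    const clean = String(title).trim();
    if (clean === "") throw new Error("A title is required.");
    this.articles.push({ id: this.articles.length + 1, title: clean });
  }
  all() {
    return this.articles.slice(); // a copy, so views can't mutate the model
  }
}

// View: turns data into markup; knows nothing about storage.
function escapeText(text) {
  return text.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");
}
function articleListView(articles) {
  return "<ul>" + articles.map((a) => "<li>" + escapeText(a.title) + "</li>").join("") + "</ul>";
}

// Controller: handles a user action, updates the model and picks the view.
class ArticleController {
  constructor(model, view) {
    this.model = model;
    this.view = view;
  }
  create(title) {
    this.model.add(title);
    return this.view(this.model.all());
  }
}
```

Because the view is just a function passed to the controller, the screens can be built and shown to users before the model behind them is finished, and vice versa.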

My most recent major project was constructing a content management system. I built it on CakePHP. Cake is built around the idea of constructing the entire project on MVC principles.

As I built the system, I constructed an interface for the user to play with first, then built the supporting architecture.

Recently, I had a discussion with a friend of mine, and he brought up the idea of constructing screens to show the user first, then building the support system afterward. Funny how things seem to work out like that.

The point of all this is simple: engineers need to pretend like the clients are users, because they are. When building a function into a system, the engineer should ask, “how will this impact the user?”

Another way of stating this is that everything an engineer does, even at the deepest level of a system, will impact the user and their experience. From speed to clean integration with a client-side interface, every line of code is important to the user.

The most important thing to keep in mind is that we are no longer in a world where bare forms and featureless landscapes of system interfaces are acceptable. Users don’t want to be reminded they are working on a machine built to do math really quickly.

Users don’t want to see an interface that is a direct expression of code, they want a smooth, human-like experience that leaves them with a feeling of satisfaction and ease.

Depending on how you view this challenge, it is either fortunate or unfortunate that building a system that anticipates user needs requires careful consideration and, potentially, more code.

The age of engineers who build programs in C and COBOL that run exclusively on the command line is over. Engineers in the new era must be user-aware. They must anticipate user difficulties before they happen and prepare to guide the user, in a smart way, through the minefield.

Current-era engineers are the gatekeepers to the computing experience. They hold the keys and empower the user to build confidence and manage the experience they have in a natural way.

Instead of being a guard, keeping the common man out, be a host. Encourage your users to click, type and interact. Give them a wonderland. Pretend that they’re users, treat them like people and make the web a better place.

User Experience Means Everyone

I’ve been working on a project for an internal client, which includes linking out to various medical search utilities. One of the sites we are using as a search provider offers pharmacy searches. The site was built on ASP.Net technology, or so I assume, since all the file extensions are ‘.aspx.’ I bring this provider up because I was shocked and appalled by their disregard for the users who would be searching.

This site, which shall remain unnamed, commits one of the greatest usability crimes I’ve seen: it relies solely on Javascript to submit its search. To gauge the scope of the issue, dear reader, I did what I always do with sites like these: I disabled Javascript and tried the function again.

The search stopped working.

Mind you, if this were some sort of specialized search geared toward people working with Javascript technology, I might be able to see requiring Javascript to make the search work properly. Even in that case, shutting down the search when Javascript is disabled would still be questionable.

This, however, is a search for local pharmacies.

Considering the users that might be searching for a pharmacy, we can compile a list. This is not comprehensive: the young, the elderly, the rich, the poor, sick people, healthy people, disabled people and blind people. I’ll stop there.

Let’s consider a few select groups from that list: the poor, the disabled and the blind. The less money you have, the less likely you are to buy a new computer if your old one still works. I know this sounds funny, but I’ve seen people using Internet Explorer 5.5 to access sites in the insurance world. Lord knows what other antiques they might use to access a site. Suffice it to say, old browsers on old computers may not support the AJAX calls made by an AJAX-only search.
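The usual defense is feature detection: only switch the search over to AJAX when the browser can actually do it, and otherwise leave the ordinary form submission alone. This is a minimal sketch; `canUseAjax`, `enhanceSearchForm` and the `loadResults` callback are hypothetical names, and `win` stands in for the browser's `window`.

```javascript
// True only when the browser exposes XMLHttpRequest.
function canUseAjax(win) {
  return Boolean(win) && typeof win.XMLHttpRequest !== "undefined";
}

// Intercept the form only when AJAX is available; otherwise the form's own
// action and method handle the search exactly as plain HTML would.
function enhanceSearchForm(form, win, loadResults) {
  if (!canUseAjax(win)) return false; // leave normal submission untouched
  form.addEventListener("submit", (event) => {
    event.preventDefault(); // cancelled only when the AJAX path exists
    loadResults(form);
  });
  return true;
}

// In a browser: enhanceSearchForm(document.getElementById("search"), window, myLoader);
```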

Let’s, now, consider the two groups who are much larger than the IE 5.5 crowd: the disabled and blind. I separate these two so we can think about different situations for each.

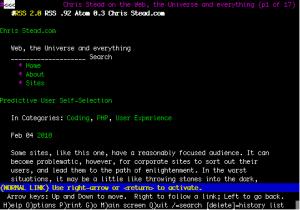

First, the blind. Blind people use screen readers to view web sites. Though I am unsure as to the latest capabilities of screen readers, but the last time I did reading about screen readers for the blind, I was brought to understand that their experience is a little like using Lynx. See a screencap below to get an idea of what Lynx is like.

[caption id=”attachment_182” align=”alignnone” width=”300” caption=”chrisstead.net on Lynx”] [/caption]

[/caption]

As you can see, browsing this way is a no-frills venture: no CSS, no Javascript, no imagery. Since many blind users can’t see what you have made available (yes, there are varying degrees of blindness), they have to rely on a program to read the screen for them. This means pages that rely on Javascript for core functionality can be out of reach for these users.

In much the same way, disabled users may have a limited set of functions they can access within their browser. This will depend on the degree of disability and the breadth of function in their browser. I can’t and won’t say what a disabled browsing experience is like, since I am not disabled and the experience varies so widely that it’s not possible to pin down. Suffice it to say, it is limited.

Now, the reason I mentioned the site was built on ASP.Net: For whatever reason, the sites I see with the worst usability almost always seem to be built on ASP.Net. I have a hard time wrapping my head around this, as I’ve built ASP/C# apps and had no problem building the core functions to operate with or without Javascript enabled. Everything you need is right at your fingertips.
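The same principle works in any server-side language: make the search an ordinary GET form that the server answers with a full page, and treat Javascript as an optional layer on top. This minimal sketch is written in JavaScript (as you might run it in Node.js) rather than ASP/C#; the pharmacy data, the `/search` path, the `zip` parameter and `handleSearch` are all hypothetical.

```javascript
// Hypothetical data; a real site would query a database.
const pharmacies = [
  { name: "Main Street Pharmacy", zip: "97201" },
  { name: "Riverside Drug", zip: "97202" },
];

// A plain GET form: it submits and works with Javascript disabled.
const searchForm =
  '<form action="/search" method="get">' +
  '<label for="zip">ZIP code</label>' +
  '<input id="zip" name="zip" type="text">' +
  '<button type="submit">Search</button>' +
  "</form>";

// Server-side handler: reads the query string and renders a complete page.
// A script may later intercept the form to fetch results with AJAX, but the
// core search never depends on it.
function handleSearch(requestUrl) {
  const url = new URL(requestUrl, "http://localhost"); // base for relative paths
  const zip = (url.searchParams.get("zip") || "").trim();
  const matches = pharmacies.filter((p) => p.zip === zip);
  const items = matches.map((p) => "<li>" + p.name + "</li>").join("");
  return searchForm + (matches.length ? "<ul>" + items + "</ul>" : "<p>No pharmacies found.</p>");
}
```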

From sites that require users to be on a Windows machine using the newest version of Internet Explorer, to web apps that require users to have Javascript and images enabled just to navigate the core functions, ASP sites often seem to be bottom of the barrel.

Perhaps it is a group of people who are used to developing desktop apps and haven’t had to consider usability in the modern age of the web. Perhaps it’s novice developers who don’t understand some of the core concepts that go into building successful web applications. Either way, the current trend of inaccessible ASP sites must come to an end.

To the ASP.Net developers of the world, I implore you to reconsider your development goals and meet the needs of your customers. To the rest of you that may be committing the same sins in another language, I beg you to be considerate of all of your users, instead of a select group. Think about usability for a degraded experience, build accordingly and make the web a better place.

Predictive User Self-Selection

Some sites, like this one, have a reasonably focused audience. It can become problematic, however, for corporate sites to sort out their users and lead them to the path of enlightenment. In the worst situations, it may be a little like throwing stones into the dark, hoping to hit a matchstick. In the best, users will wander in and tell you precisely who they are.

Fortunately, users often leave hints as to who they are without knowing it. They (hopefully) travel through your site, touching certain pages and avoiding others. They also arrive from somewhere.

When trying to select your user and direct them, your initial response may be to directly ask them who they are and what they want. This works well if you are an e-tailer like Amazon, but the rest of us don’t have quite the same luxury.

If you are planning on selecting the user once they get to your site, you are already too late; they have left. You should know something about them before they ever arrive. This, surprisingly, is not just about knowing your audience. It’s also about what they can tell you.

Yes, your users choose to tell you something before they arrive at your site.

To learn about your users sub rosa, you need look no further than the HTTP referer (the header name really is spelled that way in the HTTP specification). From the HTTP referer, you can find out where your user came from and, hopefully, something about what they want.

If your user accessed your site directly, they are most likely either a returning visitor or someone who was recommended by someone else. If they arrived at a landing page, it is most likely due to some marketing effort. This, however, says the least about the user.

If they came from another website and not a search engine, you’ll know they were reading another site related to yours. Perhaps this is a competitor. Perhaps this is a colleague. Either way, you know they have come to your site because they are interested in the link they clicked through to.

The most directly informative method a user can use to access your site is through search engines. You can gather, immediately, that your user was interested in something directly related to your site. You also know your user is actively seeking something. Finally, your user told the search engine what they wanted and, subsequently, they told you.
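The three cases above (direct, another site, a search engine) can be told apart from the referer alone. This minimal sketch is written in JavaScript, as server-side Node.js code might be, rather than in the PHP used below; `classifyReferer`, the `SEARCH_HOSTS` list and the category names are assumptions. Note that an empty referer is treated as direct, although it can also mean the browser withheld the header.

```javascript
// Host prefixes treated as search engines (an assumption; extend as needed).
const SEARCH_HOSTS = ["google.", "yahoo.", "bing.", "aol."];

// Classify a visit by its Referer header value.
function classifyReferer(referer, ownHost) {
  if (!referer) return "direct"; // typed, bookmarked, or header withheld
  let host;
  try {
    host = new URL(referer).hostname.toLowerCase();
  } catch (e) {
    return "unknown"; // malformed header value
  }
  if (host === ownHost) return "internal"; // moving between your own pages
  const isSearch = SEARCH_HOSTS.some((s) => host.startsWith(s) || host.includes("." + s));
  return isSearch ? "search" : "other-site";
}
```

For example, `classifyReferer("https://www.google.com/search?q=pharmacy", "example.com")` returns `"search"`, while a link from a blog returns `"other-site"`.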

How?

The HTTP referer. Browsers send it as a request header, typically when the user follows a link to your page, and it tells the server where the user has been. Don’t get ahead of yourself, though. The referer only tells you the last place your user was. In our current case, that is a search engine.

The big four search engines, AKA Google, Yahoo, AOL and Bing (MSN), all use GET (query string) arguments to store information about the current search. This makes it easy to clip the information you want, like a coupon, from the HTTP referer string.

Note: The next bit of this discussion involves a little code. If you’re not comfortable with programming in the popular language PHP or this just isn’t your job, copy and paste the following into an e-mail and send it to your developer.

In PHP, you can collect user referer information with the following line of code:

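```php
// HTTP_REFERER is only set when the browser actually sent a Referer header.
$referer = isset($_SERVER["HTTP_REFERER"]) ? $_SERVER["HTTP_REFERER"] : "";
```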

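Once you have the referer stored, you can test it against the big four. One way of doing this might be like the following:

```php
// Default to "other" when the referer isn't one of the big four.
$refererType = "other";
$searchEngines = array("aol", "bing", "google", "yahoo");
// Match against the host only, so a query string mentioning "google" doesn't count.
$host = (string) parse_url($referer, PHP_URL_HOST);
foreach ($searchEngines as $value) {
    // preg_match() returns 1 for a match, 0 for no match and false on error,
    // so test for 1 ("!== false" would treat every referer as a match).
    if (preg_match("/" . preg_quote($value, "/") . "/i", $host) === 1) {
        $refererType = $value;
        break;
    }
}
```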

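Now we've got the search engine they used and a referer string. This is prime time for extracting their search query and figuring out who your user really is. Hopefully this won't turn into a Scooby-Doo episode, where Old Man Withers is haunting your site. Let's do some data extraction:

```php
// Yahoo keeps the search terms in "p"; Google, Bing and AOL use "q".
$queryKey = ($refererType == "yahoo") ? "p" : "q";
// Pull the query string out of the referer and split it into arguments.
// parse_str() also URL-decodes each value, turning "+" back into spaces.
$args = array();
$query = parse_url($referer, PHP_URL_QUERY);
if (is_string($query)) {
    parse_str($query, $args);
}
$arg = (isset($args[$queryKey]) && is_string($args[$queryKey])) ? $args[$queryKey] : "";
// Break the search phrase into individual words.
$argArray = preg_split('/\s+/', trim($arg), -1, PREG_SPLIT_NO_EMPTY);
```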

Still with me? The code part is over. Now that you have the search information and it's been broken into happy, bite-sized chunks, you can use it to do all kinds of fun things with your user. You can check their spelling, to ensure they are in the right place. You can offer special links that relate to what they searched for. You can even adjust the page to better suit the user's needs. The possibilities are limitless. By knowing a little about what the user did before they arrived at your site, you can direct their journey through your site. It is in your hands to find interesting and creative uses for this information. Go and make the web a better place.
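As one example of those fun things, here is a minimal sketch of offering special links based on the individual search words pulled from the referer. It is in JavaScript rather than PHP, and the keyword-to-link map, its paths and `suggestLinks` are hypothetical; in practice your content team would maintain the map.

```javascript
// Hypothetical keyword-to-link map.
const relatedLinks = {
  pharmacy: "/find-a-pharmacy",
  claims: "/members/claims",
  id: "/members/id-cards",
};

// Return the links matching the search words, without duplicates,
// in the order the words appeared in the search.
function suggestLinks(terms, links) {
  const seen = new Set();
  const result = [];
  for (const term of terms) {
    const key = term.toLowerCase();
    if (Object.prototype.hasOwnProperty.call(links, key) && !seen.has(links[key])) {
      seen.add(links[key]);
      result.push(links[key]);
    }
  }
  return result;
}
```

A visitor who searched for "Pharmacy near me" would be offered the pharmacy finder link; words with no entry in the map are simply ignored.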